Children Playing

A commonly held belief is that computers are infallible, with algorithms providing perfect answers to questions, and that replacing humans with AIs will make everything easier, faster and safer.

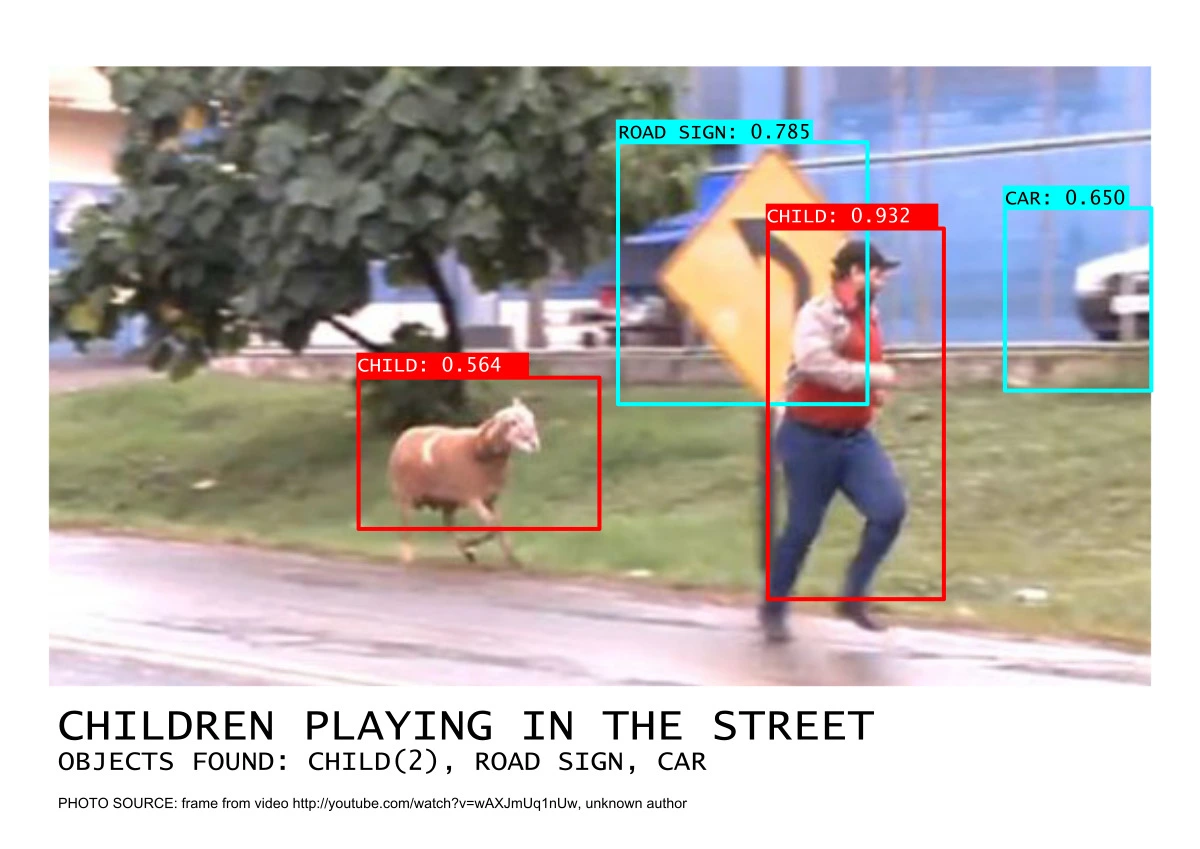

But interpreting the world requires more than correct code execution: it requires knowledge of that world. The more we try to incorporate AIs into our daily lives, the more information about the world we need to feed them. When providing this knowledge we are subject to the same problems that we have everywhere else, including bias, blindness and ignorance. And while this sometimes leads to funny misinterpretations, it can also have more serious consequences.

In 2015 Google was at the center of a scandal when its image recognition service tagged black people as gorillas. The algorithm had been trained with images taken from the internet, where racist images are widespread. The algorithm did what it was created for: it learned from the examples and applied that knowledge to classify new images. In 2018, after anti-immigration protests in the city of Chemnitz, Germany, a video appeared on social networks showing a group of protesters chasing immigrants. Hans-Georg Maaßen, then President of the Federal Office for the Protection of the Constitution, denied seeing any indication of a 'Hetzjagd' (hunt) in the video or other media.

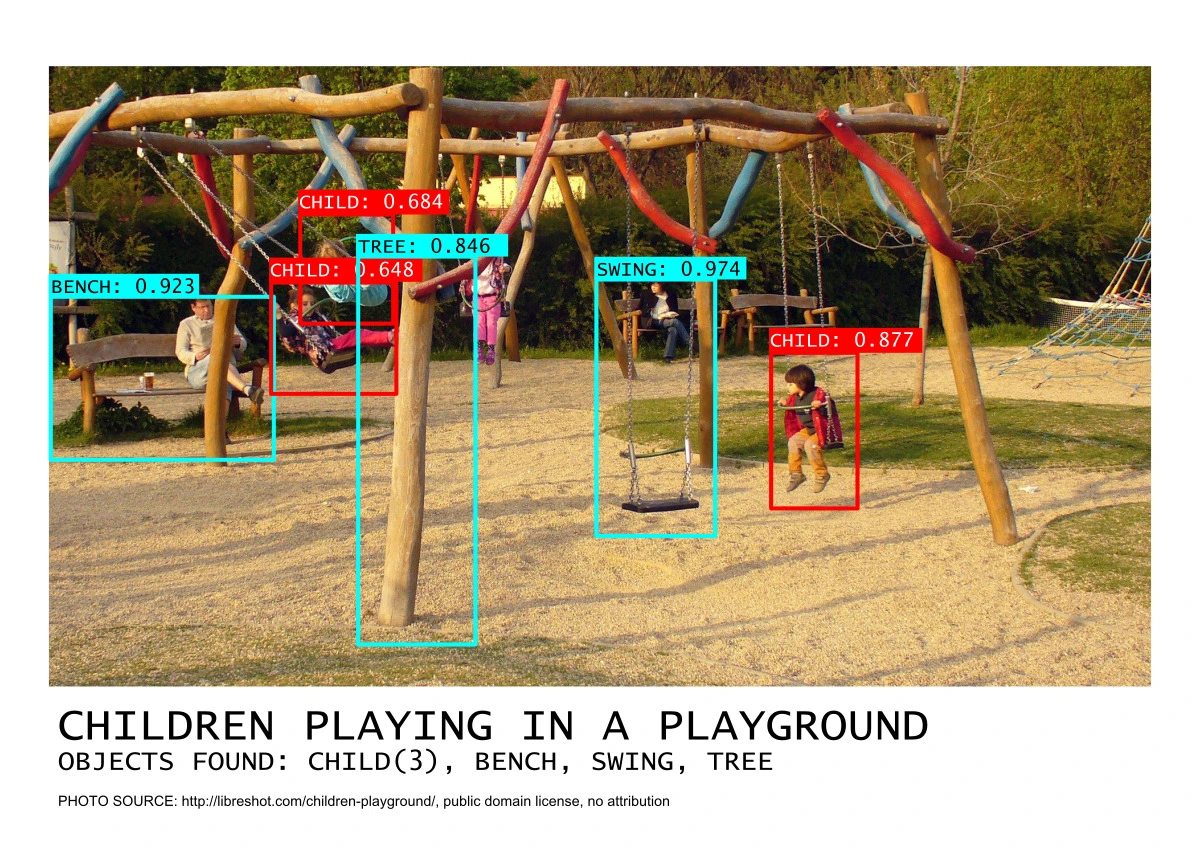

Google's response, it was revealed afterwards, was to simply remove the gorilla tag from all their pictures. Is it impossible even for Google, the single biggest investor in AI in the world, to deal with bias and racism? Are we supposed to depend on these same systems for critical decisions, like driving cars? Maaßen was, leaving political interpretations aside, blind to the situation shown in the video. What will happen if we decide to use AIs to detect dangerous situations in the street? Who would train them, and which examples would they use?